Check out the last newsletter:

Game Theory, AI Context, and a Gmail Game-changer

Greetings, friends.

Here is your weekly dose of 4-Good Finds, a list of what I’m learning and testing.

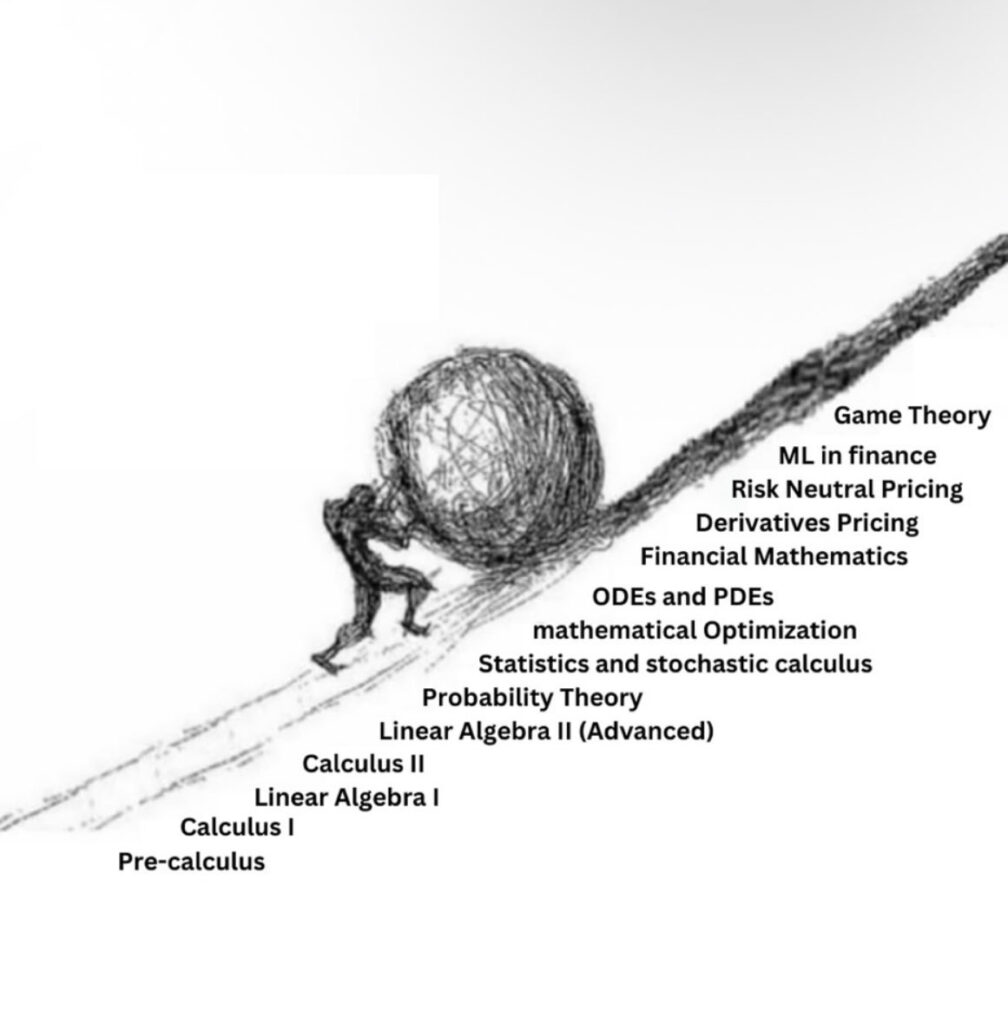

1/ What I am learning

Game theory is the mathematical study of strategies.

If you’re playing Monopoly one day and decide you want to work out, mathematically, exactly what the best decisions at every phase of the game would be, then you would be creating a work of game theory.

It doesn’t have to be a board game, though. It can be any situation where people make decisions in pursuit of goals. You study the situation, the odds, and the decisions available, work out which would be optimal, then compare that with what people actually do.

So the situations game theory might study include optimal betting strategies in poker, or nuclear weapons deterrence strategies between nations, applying many of the same concepts to both.

The most powerful lesson from game theory is that the most important parts of life (career, business, and relationships) are not zero-sum games. Stop fighting for a bigger slice of a fixed pie. Start working with others to bake a bigger pie for everyone. The best way to win is to create more winning for all.
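To make "not zero-sum" concrete, here is a tiny sketch in Python. The payoff numbers are invented for illustration, and the function names are my own, not from the Yale course. It checks whether the payoffs always add up to the same total (a fixed pie) and finds the outcomes where neither player gains by changing only their own choice.

```python
# Hypothetical payoffs for two players who each "cooperate" or "compete".
# Each entry maps (choice_a, choice_b) -> (payoff_a, payoff_b). Numbers are invented.
PAYOFFS = {
    ("cooperate", "cooperate"): (4, 4),
    ("cooperate", "compete"): (0, 3),
    ("compete", "cooperate"): (3, 0),
    ("compete", "compete"): (1, 1),
}
CHOICES = ("cooperate", "compete")


def is_fixed_pie(payoffs):
    """True when every outcome's payoffs add up to the same total (zero-sum or constant-sum)."""
    totals = {a + b for a, b in payoffs.values()}
    return len(totals) == 1


def best_response_a(choice_b):
    """Player A's best choice, given what player B does."""
    return max(CHOICES, key=lambda choice_a: PAYOFFS[(choice_a, choice_b)][0])


def pure_equilibria():
    """Outcomes where neither player gains by changing only their own choice."""
    stable = []
    for a in CHOICES:
        for b in CHOICES:
            a_ok = all(PAYOFFS[(a, b)][0] >= PAYOFFS[(alt, b)][0] for alt in CHOICES)
            b_ok = all(PAYOFFS[(a, b)][1] >= PAYOFFS[(a, alt)][1] for alt in CHOICES)
            if a_ok and b_ok:
                stable.append((a, b))
    return stable


print(is_fixed_pie(PAYOFFS))   # the totals differ, so the pie is not fixed
print(pure_equilibria())       # both "everyone cooperates" and "everyone competes" are stable
```

In this made-up game, both players cooperating is stable and leaves each of them better off than both competing, which is the "bigger pie" idea in miniature.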

I started to learn Game Theory this week with this free playlist from Yale.

> critical for economics

> some brain work-out

> it’s just fun

> high signal

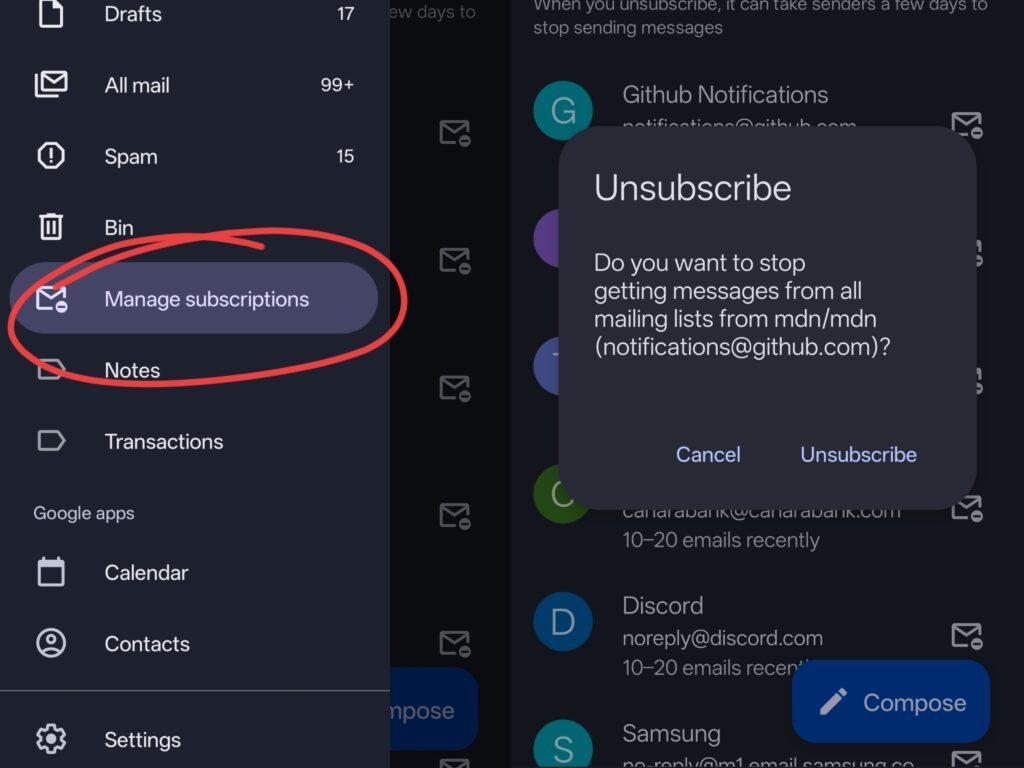

2/ Game changer

You can now clear the promotional junk out of your Gmail inbox in one click. I am not kidding!

It now takes only about 2 minutes of clicking unsubscribe on the marketing emails you receive to change your inbox (and your life) forever.

3/ Quote I’m pondering

You want to go through every day by learning something. It’s a good mental discipline to have. A year goes by, and you have learned a great deal.

– Li Lu in His Own Words

This echoes advice from Charlie Munger: “I constantly see people who rise in life who are not the smartest, sometimes not even the most diligent, but they are learning machines. They go to bed every night a little wiser than they were when they got up, and boy does that help, particularly when you have a long run ahead of you.”

4/ What I am reading

I read Anthropic’s guide on effective context engineering for AI agents.

It rewires how you think about context, with beautiful, simple explanations.

Here’s what you need to know:

- Context Rot is real. As the number of tokens in the context window grows, the model’s ability to accurately recall info from that context drops. Happens with every LLM, even with a 1M context window.

- Prompt engineering and context engineering don’t contradict each other. They go hand in hand. Prompt engineering is about writing clear instructions to get the output you want. Context engineering is more programmatic: the art and science of filling the context window with just the right info.

- System prompts drive the whole performance of your model. A good system prompt balances it out: specific enough to guide behavior, but flexible enough to give the model strong heuristics. Don’t make it too vague or too restrictive.

- Tools must have clear names, separation of concerns, solid error handling, and return results without being too verbose. If a human engineer can’t say which tool to use in a situation, an AI agent won’t be able to either.

- Providing examples in prompts and tool descriptions helps. But it has to be a set of diverse examples that add something. “Teams will often stuff a laundry list of edge cases into a prompt trying to cover every rule the LLM should follow for a task. We don’t recommend this.”

- It pays off to spend time pre-processing so the right info reaches the LLM. That extra effort is fine. Dumping raw info into context, on the other hand, backfires. Without guidance, an agent wastes context by misusing tools, chasing dead ends, or missing key info.
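Here is a rough sketch of what the tool advice in the list above could look like in Python. The tool name `search_notes`, its parameters, and the result shape are all hypothetical, made up for illustration rather than taken from the guide. The point is one clear job, an error message the agent can act on, and a capped, compact result.

```python
def search_notes(query: str, notes: list[str], max_results: int = 3, max_chars: int = 80) -> dict:
    """Find notes that contain `query` (case-insensitive) and return a compact result.

    Hypothetical tool: one job, a descriptive name, and output that stays small.
    """
    if not query.strip():
        # Error handling: tell the agent what went wrong and how to fix the call.
        return {"ok": False, "error": "query must not be empty; pass a keyword such as 'invoice'"}

    matches = [note for note in notes if query.lower() in note.lower()]
    return {
        "ok": True,
        "total_matches": len(matches),
        # Cap both the number of results and their length to save context.
        "results": [note[:max_chars] for note in matches[:max_results]],
    }


notes = ["Invoice #12 is overdue", "Lunch with Sam", "Send invoice reminder", "Invoice template v2", "INVOICE archive"]
print(search_notes("invoice", notes))
print(search_notes("  ", notes))
```

The agent still learns how many matches exist through `total_matches`, so it can decide whether to narrow the query instead of reading everything.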

Common context engineering techniques:

Compaction: Have the agent summarize its message history when you’re near the context limit. The art is in which critical info you keep: unresolved issues, overall goal, execution steps, etc.
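A minimal sketch of compaction in Python, under a few assumptions of mine: token counts are estimated at roughly 4 characters per token, the 80% threshold is arbitrary, and `summarize` is a placeholder for whatever call you would make to a model to write the summary.

```python
def estimate_tokens(text: str) -> int:
    """Crude estimate, assuming roughly 4 characters per token."""
    return max(1, len(text) // 4)


def compact(messages, summarize, limit_tokens, keep_recent=2, threshold=0.8):
    """Replace older messages with one summary once usage nears the limit.

    `messages` is a list of {"role": ..., "content": ...} dicts.
    `summarize` is a hypothetical callable that takes the older messages and
    returns a short string (in practice, a model call that keeps the overall
    goal, unresolved issues, and execution steps).
    """
    used = sum(estimate_tokens(m["content"]) for m in messages)
    split = len(messages) - keep_recent
    if used < limit_tokens * threshold or split <= 0:
        return messages  # plenty of room left, or nothing old enough to summarize

    older, recent = messages[:split], messages[split:]
    summary = {"role": "user", "content": "Summary of earlier conversation: " + summarize(older)}
    return [summary] + recent


# Stand-in summarizer so the sketch runs without a model.
def fake_summarize(older):
    return f"{len(older)} earlier messages about the task"


history = [{"role": "user", "content": "x" * 40} for _ in range(10)]
print(len(compact(history, fake_summarize, limit_tokens=100)))   # compacted
print(len(compact(history, fake_summarize, limit_tokens=1000)))  # left alone
```

The most recent messages stay verbatim because they usually hold the details the agent needs for its next step.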

Structured note-taking: the agent can jot down notes outside the context window and pull them in when needed.
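One way to picture structured note-taking is a small notes file that lives outside the context window. This is only a sketch: the `NoteStore` class, its JSON file format, and the file name are my own assumptions, not something the guide prescribes.

```python
import json
from pathlib import Path


class NoteStore:
    """Hypothetical note store: notes persist in a JSON file, not in the context window."""

    def __init__(self, path):
        self.path = Path(path)

    def _load(self):
        if not self.path.exists():
            return {}
        return json.loads(self.path.read_text(encoding="utf-8"))

    def write(self, topic, note):
        """Append a note under a topic and save the file."""
        notes = self._load()
        notes.setdefault(topic, []).append(note)
        self.path.write_text(json.dumps(notes, indent=2), encoding="utf-8")

    def read(self, topic):
        """Pull back only the notes for one topic when they are needed."""
        return self._load().get(topic, [])


if __name__ == "__main__":
    store = NoteStore("agent_notes.json")  # hypothetical file name
    store.write("todo", "re-run the failing test after the fix")
    print(store.read("todo"))
```

Reading by topic means the agent brings back a few relevant lines instead of its whole history.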

Sub-agent architectures: if your main agent needs to do a task that’s not critical to the main workflow, have it spawn sub-agents that do the work, return their results, and then discard their own context. For example: the main agent needs some info -> calls a sub-agent to search -> gets back only the results. Keeps the main context clean.
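A toy sketch of the sub-agent idea in Python. There is no real model here: `run_search_subagent` is a hypothetical stand-in that does a keyword search, and plain lists play the role of context windows. What it shows is that the sub-agent's working context never reaches the main agent, only a short result does.

```python
def run_search_subagent(keyword, documents):
    """Hypothetical sub-agent: searches in its own scratch context, returns a short result."""
    scratch_context = []  # private to the sub-agent, thrown away when the function returns
    hits = []
    for title, text in documents.items():
        scratch_context.append(f"read {title}: {text}")  # verbose working notes
        if keyword.lower() in text.lower():
            hits.append(title)
    # Only this condensed result goes back to the main agent.
    return f"'{keyword}' found in: {', '.join(hits) if hits else 'no documents'}"


documents = {
    "q1-report": "Revenue grew while churn stayed flat.",
    "q2-report": "Churn rose after the pricing change.",
    "roadmap": "Ship the new onboarding flow.",
}

main_context = ["goal: explain why churn changed"]
main_context.append(run_search_subagent("churn", documents))
print(main_context)
```

The main context grows by a single line, however much text the sub-agent had to read along the way.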

That is all.

Have a wonderful weekend, all.

Much love to you and yours,

Abi